Sap Rfc Sdk 7.11 Unicode Download For Mac

Welcome to the SAP on IBM i update! This time we will focus on the replacement of the ASCII NW RFC SDK in ILE by a Unicode version: many of you have already noticed that there are several versions of the NW RFC SDK available for OS/400, while there is. Hi Jeevitha, as you can read in SAP Note 825494, the classic RFC library is being replaced by the NetWeaver RFC library (see Note 1025361). Newer operating systems will be supported by the NetWeaver RFC library.

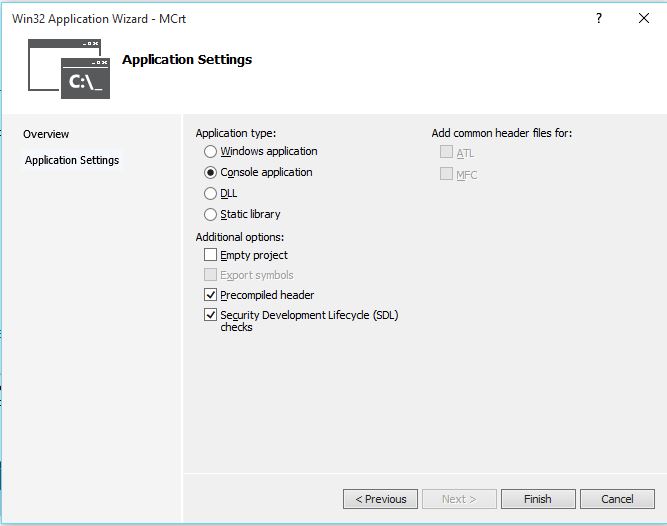

Disp+work process starts, then dies in SAP R/3 4.7 Enterprise server: I stopped the SAP R/3 4.7 Enterprise server for a backup, and when I restart it from the Microsoft Management Console, the disp+work process turns from yellow to grey. Can anyone help me solve this problem? OS: Windows 2000 Server, database: SAP DB 7.3. Here I am attaching. According to SAP Note 1581595, you plan to install the NW RFC SDK on the server where the SAP system is running. Session 1: Download NW RFC.

This week SAP released the next generation of C/C++-based RFC connectivity toolkits: the SAP NetWeaver RFC SDK 7.10 (short: NW RFC SDK). Compared to the “classic” RFC SDK (which has been available since SAP R/3 Release 2.1!), this new version brings a substantial set of new features and simplifications, which we hope will make life much easier for C developers who need to interface with SAP systems. This blog gives an overview of these features. The first thing to point out is that the NW RFC SDK is a complete redesign from scratch.

This allowed us to throw some old ballast overboard (e.g. support for COM/DCOM) and to simplify those areas where the old architecture had made certain tasks tedious and complicated (e.g. the processing of nested structures). However, this also means that the API has changed incompatibly. People who want to migrate existing RFC applications to the new SDK will need to adjust their code as well as their “way of thinking”: some things are now achieved through quite different concepts, especially the handling of input/output parameters and the RFC server case. So it’s not just a matter of “replacing this function call with that one”. The new SDK now offers virtually every feature that the R/3 kernel on the other side supports. For example, when connecting to a 7.00 backend you can work with “ABAP Messages” (E-, A- and X-messages); however, this feature is not yet available with a 4.6C backend, simply because that kernel doesn’t support it yet.

Another implication of the fact that the implementation of the RFC protocol in the R/3 kernel has changed over time is this: we didn’t want too many “if-elses” and special cases in the new code. Therefore the decision was made to “cut off” at a certain point: the NW RFC SDK only supports backend releases from 4.0B onwards. If you still need to connect to a 3.1I system, you will unfortunately have to stick with the classic SDK.

However, we hope this will not be too much of a limitation (the number of 3.1I systems is slowly approaching zero), and the advantages gained by this decision will benefit 99% of users. So what improvements does the NW RFC SDK have to offer? You no longer need to worry about getting the correct structure definitions for your imports, exports and tables: one API call fetches the complete definition of a function module from the backend DDIC and caches it for later use (metadata and repository functionality, similar to JCo).
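The following is a minimal sketch in C of a per-name metadata cache. The first lookup of a function module goes to the backend, and later lookups are served from memory. Everything here is hypothetical: FuncDescSketch, MetadataCacheSketch, the fetch callback and the fake backend. It models the caching behaviour described above. It is not the SDK's implementation, which you reach through RfcGetFunctionDesc.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical stand-in for a function description fetched from the DDIC. */
typedef struct {
    char name[31];        /* function module names are at most 30 characters */
    int parameterCount;   /* placeholder for the real metadata */
} FuncDescSketch;

/* Hypothetical fetch callback: one round trip to the backend per call. */
typedef int (*FetchFn)(const char *name, FuncDescSketch *out);

#define CACHE_SIZE 16

typedef struct {
    FuncDescSketch entries[CACHE_SIZE];
    int used;
    FetchFn fetch;
} MetadataCacheSketch;

/* Return the cached description, fetching it only on the first request. */
const FuncDescSketch *cache_get(MetadataCacheSketch *cache, const char *name) {
    assert(cache != NULL && name != NULL);
    for (int i = 0; i < cache->used; i++) {
        if (strcmp(cache->entries[i].name, name) == 0)
            return &cache->entries[i];            /* cache hit: no backend call */
    }
    if (cache->used == CACHE_SIZE) return NULL;   /* full: keep the sketch simple */
    FuncDescSketch *slot = &cache->entries[cache->used];
    if (cache->fetch(name, slot) != 0) return NULL;
    cache->used++;
    return slot;
}

/* Fake backend for trying the cache out: counts how often it is asked. */
static int fetchCount = 0;
static int fake_fetch(const char *name, FuncDescSketch *out) {
    fetchCount++;
    strncpy(out->name, name, sizeof out->name - 1);
    out->name[sizeof out->name - 1] = '\0';
    out->parameterCount = 0;
    return 0;
}
```

If you call cache_get twice with the same name, only the first call reaches fake_fetch.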

Setting and reading parameter values is simplified and unified, which now makes it possible to work with nested structures as well as with tables defined under IMPORTING/EXPORTING. No need to worry about codepages anymore.

You simply work with one character data type in your application, and the RFC library automatically chooses the correct codepage for you, depending on the backend (Western European, Japanese, Cyrillic, Unicode, etc.). Working with ABAP Exceptions, ABAP Messages or System Failures is now fully supported. Simplified support for tRFC/qRFC.

It is now possible to create tRFC transactions consisting of multiple steps (function modules). A simpler programming model.

Support for IPv6. And I probably forgot a few. The NW RFC SDK can be downloaded as described in SAP Note 1025361. Documentation will follow soon (keep an eye on that note for updates). To get started, read the header file “sapnwrfc.h” and the demo programs included in the SDK.

The above note also describes where to download the Doxygen documentation and a development guide in PDF format. The first successful projects based on the NW RFC SDK have been Piers Harding’s wrappers around the NW RFC SDK, which enable RFC communication from within Ruby and Perl programs. We hope that the new RFC SDK, as well as the “scripting connectors”, will get a warm reception in the SAP community and will enable many new projects that would otherwise have been difficult (or impossible) to achieve. And even if you already have a satisfactory RFC application based on the old SDK, it may be worth taking a look at the NW RFC SDK, as it might simplify your code and ease maintenance!


Well, that’s what I can say for a start. Feedback is of course highly welcome, but please understand that I have a full-time job, a wife and a kid to take care of, so I may not be too quick in answering all questions. Professional questions and bug reports can of course always be sent to SAP via an OSS message under component “BC-MID-RFC”.

The Q&A section of this blog is getting rather crowded now, so I have started a new blog introducing a couple of upcoming new features of the NW RFC Library: you are all invited to ask your questions there! I went through the functions in the NW SDK, and they seem much simpler to understand and use than the librfc32 library. However, I have a few questions on how the following can be done.

Currently, these are preventing me from rewriting my app to use the NW SDK: (a) There is no API for determining whether a connection handle is valid. (b) I don’t see a way to determine which parameters of an RFC are optional.

(c) Also, how do I get the description of an RFC parameter? Will extendedDescription always be a wchar_t if I use SAPwithUNICODE?

(d) There’s no function to convert a GUID to an RFC_TID and vice versa. (e) In RfcInstallTransactionHandlers, what is the sysId parameter? It wasn’t there in librfc32u. Firstly, thanks a lot for replying :). Your comments on (b) and (d) are fine; I was expecting that reasoning, thanks. Regarding (a), let me give you a little more information on my scenario. My application uses connection pooling: when an RFC call has to be made to the backend, I obtain a connection from the pool (my own local pool), first check whether it is valid (using RfcIsValidHandle), and if so, reuse it; otherwise I discard it and open a new connection to add to the pool (or ask for another connection from the pool). Since RfcIsValidHandle is no longer present, I have decided to use RfcGetConnectionAttributes instead (I don’t remember the exact function name in the NW SDK right now) and check its return code to figure out whether the connection is “clean” or not.

My only concern is that this call may be more expensive than the old RfcIsValidHandle call (though I’m not certain and can’t verify it until my prototype is ready). Any thoughts? Regarding (c), I actually meant: how do I get the comments (short text / long text) associated with the parameters of the RFC? Earlier that was obtainable via the ParamText field, I think.

Once again, thanks a lot for your response 🙂 Mustansir. Hello Mustansir, actually last time I told you nonsense: in RfcInstallTransactionHandlers you need to pass NULL (not “.”) as sysId if you want the global behaviour. Regarding (a), I can see your point. However, be warned that RfcIsValidHandle only checks whether the value of that pointer was obtained from the library and hasn’t been closed yet. It doesn’t do a ping, so it wouldn’t notice if a firewall had “silently” cut the connection in the meantime.

We are still discussing this. (c) Ah, now I get it. You mean the field “RFCUFUNINT.Paramtext”, which RfcGetFunctionInfoAsTable returned in the old library. The same applies here as to the field “Optional”: in order to keep the new library lean, this information was declared superfluous and removed for better efficiency. However, it is easy to get the information: after RfcGetFunctionDesc, simply call RFC_GET_FUNCTION_INTERFACE with the same FUNCNAME and evaluate the last two fields of the table “PARAMS”: PARAMTEXT and OPTIONAL. You can then store this information (plus anything else you may need) in the RFC_PARAMETER_DESC.extendedDescription pointer for later reference.
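Here is a sketch of keeping PARAMTEXT and OPTIONAL next to a parameter description through a void* field. The names are hypothetical:

- ParamDescSketch is a stand-in that only mirrors the extendedDescription pointer of RFC_PARAMETER_DESC.
- ParamExtraSketch is an application-defined struct.

Two things are assumptions. The first is that OPTIONAL is flagged with 'X'. The second is the PARAMTEXT buffer size. Filling the struct from the PARAMS table is left out. Check sapnwrfc.h for how parameter descriptions are actually created and updated in the SDK.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for RFC_PARAMETER_DESC: only the fields used here. */
typedef struct {
    char name[31];
    void *extendedDescription;   /* pointer reserved for application data */
} ParamDescSketch;

/* Application data taken from the PARAMS table of RFC_GET_FUNCTION_INTERFACE. */
typedef struct {
    char paramText[80];   /* assumed buffer size for PARAMTEXT */
    int optional;         /* 1 if OPTIONAL was 'X' (assumed flag value) */
} ParamExtraSketch;

/* Attach PARAMTEXT/OPTIONAL to a parameter description. Returns 0 on success. */
int attach_param_extra(ParamDescSketch *desc, const char *paramText, char optionalFlag) {
    assert(desc != NULL && paramText != NULL);
    ParamExtraSketch *extra = malloc(sizeof *extra);
    if (extra == NULL) return -1;
    strncpy(extra->paramText, paramText, sizeof extra->paramText - 1);
    extra->paramText[sizeof extra->paramText - 1] = '\0';
    extra->optional = (optionalFlag == 'X');
    desc->extendedDescription = extra;
    return 0;
}

/* Read the flag back later; parameters without extra data count as required. */
int param_is_optional(const ParamDescSketch *desc) {
    const ParamExtraSketch *extra = desc->extendedDescription;
    return extra != NULL && extra->optional;
}

/* The application owns the extra data, so it must free it. */
void free_param_extra(ParamDescSketch *desc) {
    free(desc->extendedDescription);
    desc->extendedDescription = NULL;
}
```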

Best Regards, Ulrich. One more thing I forgot to mention in my reply regarding the ParamText parameter: some time ago we contacted SAP for information on using the RFC “RPY_BOR_TREE_INIT”. We were using this RFC for BAPI browsing, but the way we interpreted its results seemed to differ from the way SAP GUI interprets them, since we were never able to build exactly the same tree as the BAPI Explorer. We asked SAP for documentation on how to use and interpret the results of RPY_BOR_TREE_INIT and were told that SAP would not provide information or support on it, since RPY_BOR_TREE_INIT is not a released RFC. Since then, we have decided to avoid using any unreleased RFCs in our application.

Unfortunately, RFC_GET_FUNCTION_INTERFACE is also an unreleased RFC. I know that the SDK internally uses this RFC for metadata retrieval, but at least we know that the SDK will be supported 🙂 Thanks, Mustansir.

Hello Mustansir, here is an update on what has happened over the last two weeks: 1) First of all, regarding RfcIsValidHandle: we decided that this alone is not of much use, as it only checks whether a handle is still in the RFC library’s list of handles. If an application can’t remember whether it already closed a handle or not, then this application has other problems to worry about 🙂 Therefore the decision was made to offer a new API, RfcPing, which really checks the connection at the TCP/IP level. This is the only way to determine whether a connection is still alive.

(RfcGetConnectionAttributes only returns the cached attributes, but a firewall may have closed the connection in the meantime without notifying either endpoint.) 2) Patch level 1 of the NW RFC SDK will be released soon, probably next week. It will contain quite a number of new APIs (and a few bug fixes); for a detailed list see SAP Note 1056472, which will be released together with the SDK. One new API will be particularly interesting for you, as you are implementing connection pools: RfcResetServerContext. It basically does what the old RfcCleanupContext did: it resets the user session in ABAP without closing the connection. 3) The parameter short text (and the “is optional” information) will not be added to the new SDK.
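Here is a minimal sketch of a pool that follows points 1) and 2) above. It pings a connection before handing it out and resets the user session when the connection comes back. The hooks in PoolOpsSketch are hypothetical stand-ins for RfcPing, RfcResetServerContext and reopening a connection; they are not the SDK signatures. The fake hooks at the end only simulate the network, so the logic can be exercised without a backend.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical connection stand-in; a real pool would wrap an RFC_CONNECTION_HANDLE. */
typedef struct {
    int id;
    int alive;   /* simulated network state */
    int inUse;
} ConnSketch;

/* Hypothetical hooks standing in for RfcPing, RfcResetServerContext and
   closing/reopening a connection. Each returns 0 on success. */
typedef struct {
    int (*ping)(ConnSketch *c);
    int (*reset)(ConnSketch *c);
    int (*reopen)(ConnSketch *c);
} PoolOpsSketch;

/* Hand out a free connection, pinging it first: a handle that merely looks
   valid may have been cut by a firewall in the meantime. */
ConnSketch *pool_acquire(ConnSketch *pool, size_t n, const PoolOpsSketch *ops) {
    assert(pool != NULL && ops != NULL);
    for (size_t i = 0; i < n; i++) {
        ConnSketch *c = &pool[i];
        if (c->inUse) continue;
        if (ops->ping(c) != 0 && ops->reopen(c) != 0)
            continue;                  /* dead and cannot be reopened: skip it */
        c->inUse = 1;
        return c;
    }
    return NULL;                       /* no usable connection */
}

/* Give a connection back after resetting the ABAP user session, so the next
   caller does not inherit its state. */
void pool_release(ConnSketch *c, const PoolOpsSketch *ops) {
    (void)ops->reset(c);   /* a real pool might close the connection if this fails */
    c->inUse = 0;
}

/* Fake hooks for trying the pool out without a backend. */
static int resets = 0;
static int fake_ping(ConnSketch *c) { return c->alive ? 0 : -1; }
static int fake_reset(ConnSketch *c) { (void)c; resets++; return 0; }
static int fake_reopen(ConnSketch *c) { (void)c; return -1; }  /* reopening fails */
```

In this design, pinging on every checkout trades one extra round trip for not handing out a silently dead connection.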

When we voted on this, the majority opinion was that it only consumes memory unnecessarily and 99% of all users will never need it. So you’ll need to bite the bullet and use RFC_GET_FUNCTION_INTERFACE. (But I can console you: that FM hasn’t been changed in decades, so there shouldn’t be any risk. After all, every SAP connector, from the RFC library via JCo and .NET Connector to the Business Connector, depends on that FM, so SAP would never change it; it would just cause too much chaos.) Best Regards, Ulrich. Hello Ulrich. I have just extracted the NW RFC SDK distribution and compiled companyClient.c without any changes to the sources.

But I found a problem. NW RFC requires all strings in UTF-16, but if I compile without any defines, the resulting binary uses ASCII characters. So there are two problems: 1) Why doesn’t compilation fail without the required defines, if only Unicode strings are accepted? 2) If I try to compile with -DSAPwithUNICODE on Linux (SuSE 10.2), I get the error ‘U16LITcU’ was not declared in this scope, from the expansion of the ‘cU’ macro. I replaced all ‘cU’ macros in the example with equivalent RfcUTF8ToSAPUC calls, and then it started working.
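For illustration, here is the kind of conversion RfcUTF8ToSAPUC performs, written in plain C: UTF-8 goes in and UTF-16 code units come out, as the replies in this thread describe SAP_UC. It uses two bytes per character and surrogate pairs above U+FFFF. utf8_to_utf16 is a hypothetical helper, not the SDK function. It also skips some validation a production converter needs, such as rejecting overlong sequences and encoded surrogates.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Convert a NUL-terminated UTF-8 string into NUL-terminated UTF-16 code units.
   Returns the number of code units written (excluding the terminator), or -1
   on malformed input or if 'out' (outCap units) is too small. */
long utf8_to_utf16(const char *in, uint16_t *out, size_t outCap) {
    assert(in != NULL && out != NULL);
    if (outCap == 0) return -1;
    const unsigned char *s = (const unsigned char *)in;
    size_t n = 0;
    while (*s) {
        uint32_t cp;
        int extra;
        if (*s < 0x80)                { cp = *s;        extra = 0; }
        else if ((*s & 0xE0) == 0xC0) { cp = *s & 0x1F; extra = 1; }
        else if ((*s & 0xF0) == 0xE0) { cp = *s & 0x0F; extra = 2; }
        else if ((*s & 0xF8) == 0xF0) { cp = *s & 0x07; extra = 3; }
        else return -1;
        s++;
        for (int i = 0; i < extra; i++, s++) {
            if ((*s & 0xC0) != 0x80) return -1;  /* bad continuation (or early NUL) */
            cp = (cp << 6) | (*s & 0x3F);
        }
        if (cp >= 0x10000) {                      /* needs a surrogate pair */
            if (cp > 0x10FFFF || n + 2 >= outCap) return -1;
            cp -= 0x10000;
            out[n++] = (uint16_t)(0xD800 | (cp >> 10));
            out[n++] = (uint16_t)(0xDC00 | (cp & 0x3FF));
        } else {
            if (n + 1 >= outCap) return -1;       /* keep room for the terminator */
            out[n++] = (uint16_t)cp;
        }
    }
    out[n] = 0;
    return (long)n;
}
```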

Hello Dmitriy, ah, now I see what’s wrong. Yes, if SAPwithUNICODE is not set, all SAP_UC variables are only half as long as they should be, and when the RFC library tries to read the full length, it causes the segmentation fault. OK, the problem with the cU macro is easily explained: Linux does not have a “wchar_t” type like the one on Windows. (On Windows wchar_t is UTF-16, while on Linux it’s a 4-byte UCS-4, I believe.) Therefore on Linux an additional step is necessary between the preprocessor and the compiler step.

See SAP Notes 1056696 (and 763741) for the details. Best Regards, Ulrich. Hello Yilong, yes, this behavior is indeed a bit unexpected. The reason is as follows: the RFC SDK allocates and initializes the memory areas for all scalar parameters (in the case of CHAR they are initialized to blanks). It doesn’t keep track of whether a user changes this memory later via RfcSetChars, and simply sends all data over to the ABAP side.


ABAP apparently doesn’t recognize the initial value (blanks) as an empty field and therefore uses that value instead of the default value. In patch level 1 we’ll change this behavior, so that all scalar parameters remain inactive until RfcSetChars/Int/Num has been called for that parameter. This should then give the expected results! RfcGetVersion was missing from the export list of the DLL; this will also be fixed in patch level 1. Best Regards, Ulrich.
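Here is a toy model of the patch level 1 behaviour described above. Each scalar parameter carries an "active" flag that only a setter sets, and only active parameters are sent. That lets the ABAP side apply its default value to the rest. ScalarParamSketch, param_set and params_to_send are hypothetical names; the real parameter container is internal to the SDK.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical model of one CHAR10 scalar importing parameter. */
typedef struct {
    const char *name;
    char value[11];   /* 10 chars, blank-initialised, plus a terminator */
    int active;       /* set only when the application assigns a value */
} ScalarParamSketch;

void param_init(ScalarParamSketch *p, const char *name) {
    assert(p != NULL && name != NULL);
    p->name = name;
    memset(p->value, ' ', 10);
    p->value[10] = '\0';
    p->active = 0;
}

/* Plays the role of a setter such as RfcSetChars: copies (and truncates)
   the value, pads with blanks, and marks the parameter active. */
void param_set(ScalarParamSketch *p, const char *v) {
    size_t len = strlen(v);
    if (len > 10) len = 10;
    memset(p->value, ' ', 10);
    memcpy(p->value, v, len);
    p->active = 1;
}

/* Count the parameters that would be sent; inactive ones are left out so
   the ABAP side falls back to the DEFAULT value of the function signature. */
size_t params_to_send(const ScalarParamSketch *params, size_t n) {
    size_t count = 0;
    for (size_t i = 0; i < n; i++)
        if (params[i].active) count++;
    return count;
}
```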

Hello Ulrich. Does NW RFC 7.10 support connections over SAProuters? I have tested the connection with rfcping from the old RFC SDK: rfcping ashost=/H/10.10.10.1/S/3299/H/192.168.0.1 sysnr=14 and it works. When I set this connection string in the login parameters for the RfcOpenConnection function, I get the following error: RFC_COMMUNICATION_FAILURE LOCATION SAP-Gateway on host cirt1 / sapgw14 ERROR hostname ‘/H/10.10.10.1/S/3299/H/192.168.0.1’ unknown TIME Tue Oct 9 13: RELEASE 640 COMPONENT NI (network interface) VERSION 37 RC -2 MODULE niuxi.c LINE 329 DETAIL NiPGetHostByName: hostname ‘/H/10.10.10.1/S/3299/H/192.168.0.1’ not found SYSTEM CALL gethostbyname COUNTER 2. Which login parameters are needed for a connection over a SAProuter? Regards, Dmitriy.
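The route string in this question is a sequence of hops. /H/ introduces a host, and a following /S/ gives the service (port) for that host. The error shows the whole string reaching gethostbyname as a single host name. The sketch below only splits such a string into hops to make its structure visible. parse_route is a hypothetical helper that handles only /H/ and /S/ elements. It says nothing about how the NW RFC library itself processes route strings.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_HOPS 8

typedef struct {
    char host[64];
    char service[16];   /* empty if no /S/ element follows the host */
} RouteHopSketch;

/* Split a route string such as "/H/a/S/3299/H/b" into hops.
   Returns the number of hops, or -1 for input this sketch does not handle. */
int parse_route(const char *route, RouteHopSketch *hops, int maxHops) {
    assert(route != NULL && hops != NULL);
    int n = 0;
    const char *p = route;
    while (*p) {
        if (p[0] != '/' || p[1] == '\0' || p[2] != '/') return -1;
        char tag = p[1];
        const char *start = p + 3;
        const char *end = strchr(start, '/');
        size_t len = end ? (size_t)(end - start) : strlen(start);
        if (tag == 'H') {                     /* new hop: host name or address */
            if (n == maxHops || len == 0 || len >= sizeof hops[n].host) return -1;
            memcpy(hops[n].host, start, len);
            hops[n].host[len] = '\0';
            hops[n].service[0] = '\0';
            n++;
        } else if (tag == 'S') {              /* service/port of the previous host */
            if (n == 0 || len == 0 || len >= sizeof hops[n - 1].service) return -1;
            memcpy(hops[n - 1].service, start, len);
            hops[n - 1].service[len] = '\0';
        } else {
            return -1;                        /* other route elements: out of scope */
        }
        p = start + len;
    }
    return n;
}
```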

Hi all, I guess it’s about time I answered some of the questions that have accumulated over the last couple of weeks. First of all: after an unfortunately very long delay we have finally finished patch level 1! Validation is currently in progress, and I expect the download file to be available on the usual SMP page next week. The problem with the SAProuter has been known for some time now and has been fixed, as has the incorrect BCD conversion. See SAP Note 1056472 for a complete list of patches and new features. Hi Dimitri, yes, unfortunately this one fell through the cracks! Apparently no one took care of it.

I only noticed it during testing last week, and as I didn’t want to delay the release any further (it’s already 5 months late, and some people have been waiting for critical fixes like SAProuter communication and the BCD conversions!), I let it slip through. However, it’s not going to stay that way. Internal tracing is still the weak point and needs some changes before it reaches its final state. I hope it’s only a minor blemish.

Best Regards, Ulrich. Hi Dmitriy, there’s not much difference here from the classic RFC SDK: instead of the old RFC_OPTIONS you use the new structure RFC_CONNECTION_PARAMETER and add an entry like this: RFC_CONNECTION_PARAMETER connParam[1]; connParam[0].name = cU("dest"); connParam[0].value = cU("C11"); Then use RfcOpenConnection instead of the old RfcOpen: handle = RfcOpenConnection(connParam, 1, &errorInfo); The “sapnwrfc.ini” file works just as it did in the old RFC SDK. PS: PDF documentation for the NW RFC SDK is now available: see SAP NetWeaver RFC Library. It also documents the ini file. Regards, Ulrich. Thanks for the link to the documentation. I have tried the DEST parameter and sapnwrfc.ini, but without success.

I compiled and ran stfcDeepTableServer.c from the examples and added a ‘MYSERVER’ RFC destination in SM59. I went to SE37, opened the STFC_DEEP_TABLE function module, ran it in debug mode with RFC destination ‘MYSERVER’, and got a response from the running stfcDeepTableServer program.

So everything is configured and working normally. Now I have written my own C program that uses NW RFC and tried to call the ‘STFC_DEEP_TABLE’ function with RFC destination ‘MYSERVER’, without success. I have tried many variants: setting the connection parameters explicitly, and sapnwrfc.ini. Every time I get the response from the original STFC_DEEP_TABLE ABAP function.

How do I call my RFC server function (in this case ‘STFC_DEEP_TABLE’ on RFC destination ‘MYSERVER’) from my NW RFC client? Regards, Dmitriy. I tried to invoke the function on my destination: RFC_ERROR_INFO errorInfo; RFC_CONNECTION_HANDLE handle; RFC_CONNECTION_PARAMETER connParam[1]; connParam[0].name = cU("dest"); connParam[0].value = cU("SPRX"); handle = RfcOpenConnection(connParam, 1, &errorInfo); But RfcOpenConnection returns NULL.

Here is the content of errorInfo: code:3 group:2 key:CALL_FUNCTION_SIGNON_INCOMPL message:Incomplete logon data. abapMsgClass: abapMsgType: abapMsgNumber: abapMsgV1: abapMsgV2: abapMsgV3: abapMsgV4: My sapnwrfc.ini (taken from the documentation): DEST=SPRX PROGRAM_ID=sprx.rfcexec GWHOST=172.23.1.2 GWSERV=sapgw14. I have created and tested the ‘SPRX’ RFC destination in SM59 with type T in registered mode, and calling my function from ABAP executes successfully. What am I doing wrong?

Please help me. Regards, Dmitriy. Hi Dmitriy, yes, multithreading is supported (it’s even the recommended way to write a server). All you need to do is put RfcRegisterServer and the entire “dispatch loop” into a separate thread.

What happens if new calls arrive while my implementation is still running? Will they be queued? And how does NW RFC serve simultaneous calls? As long as the RFC_CONNECTION_HANDLE is still busy in your “server function”, the RFC connection on the R/3 side is in a “busy” state, so the R/3 kernel cannot send a second request over it. The R/3 work process waits for a certain time (which can be customized via a profile parameter, I think), and if no free RFC connection for that RFC destination becomes available within that time, the CALL FUNCTION DESTINATION statement in ABAP aborts with a timeout. Basically, what you have to do is set up N separate threads, each of which creates a new RFC_CONNECTION_HANDLE via RfcRegisterServer and uses that handle in an RfcListenAndDispatch loop.

Then the R/3 side knows there are N active connections it can use for sending simultaneous RFC requests to your server. I hope I was able to explain this properly! Best Regards, Ulrich. I have an ABAP program that exports a string parameter.

When the returned string is longer than 255 characters, RfcGetStringLength reports approximately half of the source size (for example, source size: 8921, returned: 4588). RfcGetString and RfcGetChars also return only half of the original string, even when called with the right sizes. I have checked the TCP traffic, and the complete string was sent to the client. The NW RFC client is compiled with -DSAPwithUNICODE; I have tested on SuSE Linux 10.3 and Windows 2000. How can I fix this?

Thanks for the threading explanation, everything works for me now. Regards, Dmitriy.
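The N-connection setup explained above can be modelled as a small scheduling problem. Each thread that has called RfcRegisterServer and sits in its RfcListenAndDispatch loop contributes one connection, which is either idle or busy. The sketch below models only that bookkeeping, with no threads and no SDK calls. DestinationSketch, the limit of 16 connections and the "first idle connection" policy are assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_CONNS 16

/* Toy model of the backend's view of one registered RFC destination: one
   entry per registered server connection (one per server thread). */
typedef struct {
    int busy[MAX_CONNS];
    size_t count;          /* N = number of registered connections */
} DestinationSketch;

/* Hand a request to the first idle connection and return its index, or -1
   if all N are busy (the ABAP caller would wait and eventually time out). */
int dispatch_request(DestinationSketch *d) {
    assert(d != NULL && d->count <= MAX_CONNS);
    for (size_t i = 0; i < d->count; i++) {
        if (!d->busy[i]) {
            d->busy[i] = 1;
            return (int)i;
        }
    }
    return -1;
}

/* Called when the server function running on connection i has returned. */
void finish_request(DestinationSketch *d, int i) {
    assert(i >= 0 && (size_t)i < d->count);
    d->busy[i] = 0;
}
```

With N = 2, two requests can run at the same time and a third has to wait until one of them finishes.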

Hello Tim, there are several possibilities here. The easiest is probably to just use RfcSetString(functionHandle, cU("YIELD"), cU("123.45"), 6, &errorInfo); The RFC library will then automatically convert the string “123.45” to a BCD number. Using RFC_DECF16 is also possible; for converter functions (int/float/double to DECF16 and vice versa) see the header file sapdecf.h, which comes with the NW RFC SDK. You may also use RfcSetFloat, but there the problem is that converting a “double” (which is IEEE 754 binary floating point) to a BCD number (which is fixed point) will lead to rounding inaccuracies. Regards, Ulrich.
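The string route is exact while a double is not. The value 123.45 has no exact binary floating-point representation, but as a decimal string it maps digit by digit onto packed BCD. The sketch below encodes a decimal string as ABAP packed decimal (type P). That format stores two digits per byte, and the last nibble holds the sign: 0xC for positive, 0xD for negative. encode_packed is a hypothetical helper showing the format, not the conversion code inside the RFC library. It rejects values that would need rounding.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Encode a decimal string such as "123.45" or "-7.5" into an outLen-byte
   packed decimal field with 'decimals' decimal places.
   Returns 0 on success, -1 if the text is malformed or does not fit. */
int encode_packed(const char *text, unsigned char *out, size_t outLen, int decimals) {
    char digits[64];
    size_t nd = 0;
    int negative = 0, seenPoint = 0, fraction = 0;
    const char *p = text;

    assert(text != NULL && out != NULL);
    if (outLen == 0) return -1;
    if (*p == '-' || *p == '+') negative = (*p++ == '-');
    for (; *p; p++) {
        if (*p == '.' && !seenPoint) { seenPoint = 1; continue; }
        if (!isdigit((unsigned char)*p) || nd + 1 >= sizeof digits) return -1;
        if (seenPoint && ++fraction > decimals) return -1;  /* would need rounding */
        digits[nd++] = *p;
    }
    if (nd == 0) return -1;                       /* no digits at all */
    for (; fraction < decimals; fraction++) {     /* pad missing decimal places */
        if (nd + 1 >= sizeof digits) return -1;
        digits[nd++] = '0';
    }
    if (nd > outLen * 2 - 1) return -1;           /* one nibble is the sign */

    memset(out, 0, outLen);
    size_t nibble = outLen * 2 - 1;               /* index of the sign nibble */
    out[nibble / 2] |= negative ? 0x0D : 0x0C;
    for (size_t i = nd; i > 0; i--) {             /* digits, right to left */
        nibble--;
        unsigned char d = (unsigned char)(digits[i - 1] - '0');
        /* even nibble index = high half of the byte, odd = low half */
        out[nibble / 2] |= (nibble % 2) ? d : (unsigned char)(d << 4);
    }
    return 0;
}
```

For example, encode_packed("123.45", buf, 4, 2) produces the bytes 00 12 34 5C.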

Hello Bartlomiej, I think I finally know what the problem is. Of course, using tables in RFC is no problem; that’s a standard feature. The problem rather seems to be an inconsistency in your DDIC. I tried to reproduce it here, but our 6.40 test system doesn’t have the types “CCVXMATNRTAB” and “DATUMRANGETAB”. Are these standard SAP types or custom developments? Anyway, I think one of these types must be broken: the error message “non-unicode length is too small” is thrown if the total length of a structure is smaller than the sum of all field lengths of that structure. So something must be incorrectly defined in the DDIC here.

To test whether this theory is correct, can you change the two tables to TYPE BAPIRET2? This is an old structure that should be OK in any case. Once we know which of the types has a problem, we can take a closer look at it. Regards, Ulrich.

Hello Bartlomiej, very strange. No idea at the moment. Can you find any log messages regarding this error in the backend’s system log (SM21) or shortdump log (ST22)?

I know that during logon the function module “RFC_PING” is executed. Perhaps the name of that function module is for some reason truncated to “R”, so that the backend no longer recognizes it?! Has the backend been converted from Unicode to non-Unicode recently? In that case a possible explanation would be that your RFC program still “thinks” the system is Unicode and consequently sends the function module name in Unicode format (two bytes per char). That would be 52 00 46 00 43 00 5F 00 50 00 49 00 4E 00 47 00 (R F C _ P I N G). The backend system (now non-Unicode) would then interpret the second byte of the character “R” as the terminating zero of the string 🙂 In that case a simple restart of the RFC application would suffice: it should then fill its metadata cache freshly from the backend’s DDIC and recognize the system as non-Unicode. Regards, Ulrich.
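The byte layout behind this explanation can be reproduced in a few lines. The sketch encodes a function module name (here RFC_PING) as UTF-16LE, then reads the buffer the way a one-byte-per-character partner would. ascii_to_utf16le is a hypothetical helper written for this illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Encode an ASCII name as UTF-16LE bytes (two bytes per character), the way
   a program that believes its partner is Unicode would send it.
   Returns the number of bytes written, or 0 if 'out' is too small. */
size_t ascii_to_utf16le(const char *name, unsigned char *out, size_t outCap) {
    assert(name != NULL && out != NULL);
    size_t n = 0;
    for (; *name; name++) {
        if (n + 2 > outCap) return 0;
        out[n++] = (unsigned char)*name;  /* low byte: the ASCII code */
        out[n++] = 0x00;                  /* high byte: zero for ASCII */
    }
    return n;
}
```

A reader that treats these bytes as a NUL-terminated one-byte string stops after the first character, so it sees only "R".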

I’ve written a desktop application that uses the NW RFC library. On many computers under WinXP, Win2k and Vista everything works correctly. But I have one client on Vista Home Premium who can’t connect to R/3 via NW RFC. Here is the error from RfcOpenConnection: RC:0 code:1 group:4 key:RFC_COMMUNICATION_FAILURE message: LOCATION CPIC (TCP/IP) on local host with Unicode ERROR gethostname failed TIME Fri Mar 14 08: RELEASE 710 COMPONENT CPIC (TCP/IP) with Unicode VERSION 3 RC 485 MODULE r3cpic.c LINE 11417 SYSTEM CALL NiMyHostName COUNTER 3 abapMsgClass: abapMsgType: abapMsgNumber: abapMsgV1: abapMsgV2: abapMsgV3: abapMsgV4: NW RFC version 7.10 patch 1. All connection parameters are correct, and from this computer the user can connect to R/3 via SAP Logon.

The connection is made via SAProuters. Best wishes, Dmitriy. Hello, I built a small .NET wrapper for the NW RFC SDK using C++/CLI and Visual Studio 2008. If I use this wrapper from within my C# application, I sometimes get an “AccessViolationException” in RfcListenAndDispatch. This exception means that something is reading from or writing to protected memory. At first I thought this could be a threading issue, but recently I saw it with a single thread too. I’m using NW RFC SDK 7.1 and Visual Studio 2008.

Are there any known problems with the NW RFC SDK in combination with .NET? Hello Roland, there’s one known problem regarding the combination NW RFC SDK + Visual Studio 2008: if you use some of the functions from sapuc.h (e.g. strlenU, printfU, etc.), it will lead to an access violation. So my first guess is that one of your server implementation functions (which are called internally from RfcListenAndDispatch) is using such a function. Solution: replace the “U-functions” from sapuc.h with the corresponding Windows “w-functions” from wchar.h (e.g. wprintf instead of printfU). On Windows the SAP_UC data type is equivalent to wchar_t. Another question: why are you using the NW RFC SDK directly? On service.sap.com/connectors you’ll also find the “SAP .NET Connector”, which eliminates the need to write your own wrapper around the NW RFC SDK.

Regards, Ulrich. Hello Roland, other than that I have no initial ideas, which means we need a closer analysis. Are you able to produce any core dumps or Dr. Watson information that would show us a stack trace? If you can reproduce it in the debugger, can you set a breakpoint at the beginning and at the end of your server implementation?

This would allow us to isolate the error, i.e. to see whether it occurs in RfcListenAndDispatch before calling the server function, in the server function itself, or in RfcListenAndDispatch after calling the server function.

Also, if you are able to create a small minimal test case (without C#), I can analyze it over here. Regards, Ulrich.

So, it’s ready for testing. You can find a little test example at It can be started in two modes: as a C++ executable (just set the compile options to exe) and as a .NET executable (just compile the C# project and set the C++ project’s mode to dll). If I run the example as C++, I get no access violation, but I noticed that some C++ exceptions are thrown internally. Maybe these are responsible for the error? If I run the example with C#, I get the access violation.

Just click OK on the message box many times and you will see it, too. By the way, I haven’t included the NW RFC SDK. You need to copy the 32-bit library files into /RfcTestLibrary/rfclib/lib32 and the DLLs into /Debug and /TestCSStarter/Bin/Debug. I hope that is all you need. Regards, Roland.

Hello Roland, I tried to look at your example today, but I could not open the project: we don’t have VS 2008 here at SAP yet. However, from looking at the code I have one idea what the problem might be. In FunctionDescLookup you return one hard-coded function description handle for all lookup requests. You say that the backend system will only execute this one function module on your server, but what if for some strange reason another function module were executed on that RFC destination? RfcListenAndDispatch would create an RFC_FUNCTION_HANDLE from your sserverFunctionDesc and then try to write the input data of the other function module into that RFC_FUNCTION_HANDLE, which could easily lead to a crash. And no one can guarantee that the SAP system sends only requests for this one function module to the RFC destination of your server program!

I recommend checking the functionName parameter inside FunctionDescLookup and returning RFC_NOT_FOUND if the name doesn’t match the expected function name. That will eliminate problems like the above. If that doesn’t fix the problem, there are a few other points to try: 1. If it is really the case that the application doesn’t crash as a C++ application, only as C#, then this seems to indicate that the problem is not inside sapnwrfc.dll, but in the interoperability of C++ and C#.

But that would be for Microsoft to debug, not us. 2. Do try to create a crash dump file using the Microsoft tools described at I can send you a debug version of sapnwrfc.dll and a pdb file; then we can see the exact stack trace at the point of the crash.
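The name check recommended for FunctionDescLookup might look like the following sketch. SketchRc, SketchFuncDesc and Z_MY_SERVER_FUNCTION are hypothetical stand-ins. The real callback works with SAP_UC strings and the SDK's own handle and return-code types (RFC_NOT_FOUND), so take the actual signature from sapnwrfc.h.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical stand-ins for the SDK's return codes and description handle. */
typedef enum { SKETCH_RFC_OK, SKETCH_RFC_NOT_FOUND } SketchRc;
typedef struct { const char *name; } SketchFuncDesc;

/* The single function module this server implements (hypothetical name). */
static SketchFuncDesc serverFunctionDesc = { "Z_MY_SERVER_FUNCTION" };

/* Lookup callback: hand out the description only for the expected name, and
   report NOT_FOUND for anything else, instead of returning a mismatched
   description that would then be filled with another module's data. */
SketchRc function_desc_lookup(const char *functionName, SketchFuncDesc **descOut) {
    assert(functionName != NULL && descOut != NULL);
    if (strcmp(functionName, serverFunctionDesc.name) != 0) {
        *descOut = NULL;
        return SKETCH_RFC_NOT_FOUND;
    }
    *descOut = &serverFunctionDesc;
    return SKETCH_RFC_OK;
}
```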

Best Regards, Ulrich. Hello Ulrich, thank you for the debug DLL, but my problem is that now I can’t execute my program with it. Each time I want to start it with the debug DLL and lib file, an error message pops up saying that the program requested an unusual abort. Maybe it can’t load the DLL, or something? I uploaded the test project for Visual Studio 2005 for you:

You have to copy the RFC SDK files into the directories described a few posts ago. I will try it again tomorrow; maybe I will get it working then. Regards, Roland.

Hello Roland, I’ve seen the error about the “abort in an unusual way” before. So far it has always been caused by the correct version of the C runtime (msvcr80.dll) missing on that computer. But in your case the correct version must be installed, as otherwise the optimized sapnwrfc.dll wouldn’t work either?!

Ah, wait a moment: please check your project properties under “C/C++ > Code Generation”. Does “Runtime Library” say “Multi-threaded Debug DLL (/MDd)”? In that case switch it to “Multi-threaded DLL (/MD)”; that should fix it.

Windows can’t run a process in debug mode if two modules have different runtime settings, so your executable needs to use the same setting as sapnwrfc.dll, which was compiled with /MD. I looked at the VS2005 project and have another idea you could try: I noticed that in the Preprocessor settings the “Preprocessor Definitions” include only WIN32 and DEBUG. You also need to specify SAPwithUNICODE here; otherwise all SAP_UC data types have only one byte per char instead of two. That would explain the sporadic crashes: let’s say your function module has a CHAR10 field. As long as this field is filled with only 5 chars, everything is fine, but when a function call arrives from the backend where this field is filled with 6 or more chars, the RFC library will write beyond the boundaries of the array when filling the data container, and this will cause the access violation.

Also set the definition SAPonNT. Best Regards, Ulrich. Hello Ulrich, OK, I have now tried this with several combinations of compile parameters; nothing seems to work. Maybe the problem is not sapnwrfc.dll but one of the other DLLs. Maybe they are incompatible with the current debug version?

In the meantime I have tried to wrap some parts of the NW RFC SDK with pure .NET interop, meaning that I don’t use any C++/CLI code and let .NET do all the work for me. Most wrappers I have seen are based on this technique, so that should work fine.

But after running an example program I got the same AccessViolationException at the same place as before. So it seems to be an error in .NET or in the RFC SDK.

We’ve decided to downgrade to the old version of the SDK, because we need the RFC functionality very soon. Regards, Roland. Hello Dev, yes, SAPwithUNICODE is mandatory! Internally the NW RFC library uses two bytes per char for everything.

So if you omit that preprocessor definition, you are passing arrays of one byte per char to the library, and it will certainly write past the end of an array at some point. Note, however, that this does not affect the ability to communicate with non-Unicode backends: the NW RFC library (and your programs) work the same regardless of whether the backend is Unicode or non-Unicode. (In my opinion this is one of the big benefits: one program supports several backend versions, and no adjustment of the external program is necessary when backends are upgraded.) Regards, Ulrich.
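This is the arithmetic behind the CHAR10 example earlier in this thread. When SAPwithUNICODE is missing, the application allocates one byte per character while the library writes two. The hypothetical helpers below compute how many bytes would land past the end of the application's buffer.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Bytes the library writes for 'chars' characters at two bytes per char. */
size_t lib_bytes_for_chars(size_t chars) {
    return chars * sizeof(uint16_t);
}

/* Bytes the application allocated for a field of 'fieldChars' characters,
   given its own character width (1 when SAPwithUNICODE is missing). */
size_t app_bytes_for_field(size_t fieldChars, size_t appCharSize) {
    return fieldChars * appCharSize;
}

/* Bytes written past the end of the application's buffer when the library
   fills 'used' characters of the field (0 means no overrun). */
size_t overrun_bytes(size_t used, size_t fieldChars, size_t appCharSize) {
    assert(used <= fieldChars);
    size_t written = lib_bytes_for_chars(used);
    size_t available = app_bytes_for_field(fieldChars, appCharSize);
    return written > available ? written - available : 0;
}
```

overrun_bytes(5, 10, 1) is 0 and overrun_bytes(6, 10, 1) is 2. That matches "fine with 5 chars, access violation with 6 or more".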

Hello Ken, yes, the NW RFC library can be used for communication with 4.6C backends, and this is fully supported. In practice, however, there may be a few problems: 4.6C is a “special” release insofar as there are two “flavors” of it in existence: (1) the original 4.6C (4.6C kernel + 4.6C ABAP part), and (2) 4.6C updated with a backward-compatible 4.6D kernel. The NW RFC library (more precisely, the function RfcGetFunctionDesc) uses a DDIC feature that changed between ABAP 4.6C and 4.6D. Initially, the NW RFC library did not yet distinguish correctly between these two cases. See also SAP Note 1056472, point 10, where one particular problem was fixed for patch level 1. Another problem with 4.6C/4.6D was discovered just last week and will be fixed in patch level 3. In general I have to say: it may happen that RfcGetFunctionDesc fails when trying to retrieve a function metadata description from a 4.6C or 4.6D DDIC.

If you find during testing that one of the function modules your application wants to use is affected by this problem, just open a message under BC-MID-RFC so we can fix it. Best Regards, Ulrich. Hello Shlom, the answer to your question could be “SNC”. SNC is to RFC what HTTPS is to HTTP. You need an additional library (the sapcryptolib or third-party implementations such as Secude, Kerberos or Microsoft NTLM) and a corresponding certificate. The backend system also needs to be set up to use the same mechanism. Details can be found in the “SNC User’s Guide” (Security in Detail > Secure User Access > Authentication & Single Sign-On).

Once SNC is set up on the R/3 side, it is quite easy to use on the RFC library side (see the hints in sapnwrfc.h or my article in the March/April issue of SAP Professional Journal). Best Regards, Ulrich.

Hello George, the answer is easy: you should no longer need to set any codepages, so the NW RFC lib no longer allows you to set one! The lib automatically chooses the correct codepage for the current backend, which should (in theory) solve all problems. At most, it may be necessary to set the correct logon language (such as JA for Japanese) to give the RFC lib a hint if multiple languages are possible.

If you think something doesn’t work right in your particular scenario and you can’t get the data across the line correctly, please open a support ticket on BC-MID-RFC so we can investigate more closely. Regards, Ulrich.

Hello Pol, we did in fact already find a memory leak in RfcCloseConnection, which will be fixed in the upcoming 7.11 patch level 1. It could be the same thing you are reporting. However, the leak fixed now is only a few bytes.

You would need a very large number of loop iterations to actually notice it. So either you are opening and closing a lot of connections, or you have found something different. In any case, please repeat your test after the next patch (due in one or two weeks). If the leak doesn’t go away, we’ll need to profile it in more detail. Best Regards, Ulrich.

Hello Ulrich, thanks for your response. I’m impatiently waiting for this new patch. I’m writing software for real-time database synchronisation between SAP systems and external databases.

This software must run 24/7, which is why I cannot afford a memory leak. It uses many threads. Some of them use client connections to move data from external databases to SAP, and the others use server connections to move data from SAP to external databases.

I think there is probably another problem with RfcInvoke when it is used with large amounts of parameter data. I will check my own software first, before posting a new message on the blog if this turns out to be right.

Thanks in advance for your help.

Hi: I need to expose SAP remote function calls to Visual Basic 6 applications using the NW RFC SDK. However, the VB6 programmers don’t want to call SAP RFC functions directly or send and receive SAP data formats. Instead, they would like to call a C COM DLL that calls the NW RFC SDK library, moves the data into a C array of structures, and passes it to the VB programs. Before attempting a solution this way, I would like to know whether it is a recommended approach or whether there is a better one. Either way, I would appreciate some guidelines and recommendations before starting.

My best regards.

DIF/DIX is a new addition to the SCSI Standard and a Technology Preview in Red Hat Enterprise Linux 6. DIF/DIX increases the size of the commonly used disk block from 512 to 520 bytes by adding the Data Integrity Field (DIF).
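The 8 extra bytes per sector hold the protection information defined by the T10 standard. This consists of a 2-byte guard tag (a CRC-16 over the 512 data bytes, polynomial 0x8BB7), a 2-byte application tag, and a 4-byte reference tag. The sketch below computes and verifies the guard tag in software purely for illustration. `dif_tuple`, `make_dif`, and `dif_guard_ok` are hypothetical names. Real HBAs do this in hardware, and on the wire the fields are big-endian rather than laid out like the in-memory struct shown here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* CRC-16/T10-DIF: polynomial 0x8BB7, initial value 0, no bit reflection,
   no final XOR. Bit-by-bit rather than table-driven, for readability. */
static uint16_t crc16_t10dif(const uint8_t *data, size_t len) {
    uint16_t crc = 0x0000;
    for (size_t i = 0; i < len; i++) {
        crc ^= (uint16_t)(data[i] << 8);
        for (int bit = 0; bit < 8; bit++) {
            if (crc & 0x8000)
                crc = (uint16_t)((crc << 1) ^ 0x8BB7);
            else
                crc = (uint16_t)(crc << 1);
        }
    }
    return crc;
}

/* In-memory view of the 8-byte tuple appended to a 512-byte sector
   (512 + 8 = 520 bytes). On the wire the fields are big-endian. */
struct dif_tuple {
    uint16_t guard_tag;  /* CRC-16 over the 512 data bytes */
    uint16_t app_tag;    /* application-defined; 0 here */
    uint32_t ref_tag;    /* Type 1 protection: low 32 bits of the LBA */
};

/* What the HBA computes when a write occurs. */
static struct dif_tuple make_dif(const uint8_t sector[512], uint32_t lba) {
    struct dif_tuple t;
    t.guard_tag = crc16_t10dif(sector, 512);
    t.app_tag = 0;
    t.ref_tag = lba;
    return t;
}

/* What the storage device or receiving HBA checks: recompute the guard tag
   and compare it with the stored one. */
static int dif_guard_ok(const uint8_t sector[512], const struct dif_tuple *t) {
    return crc16_t10dif(sector, 512) == t->guard_tag;
}
```

A single flipped bit in the 512 data bytes always changes the CRC, so `dif_guard_ok` reports the mismatch on read.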

The DIF stores a checksum value for the data block that is calculated by the Host Bus Adapter (HBA) when a write occurs. The storage device then confirms the checksum on receipt and stores both the data and the checksum. Conversely, when a read occurs, the checksum can be checked by the storage device and by the receiving HBA.

Linux containers provide a flexible approach to application runtime containment on bare-metal systems without the need to fully virtualize the workload.

Red Hat Enterprise Linux 6 provides application-level containers to separate and control application resource usage policies via cgroups and namespaces. This release includes basic management of the container life cycle, allowing creation, editing, and deletion of containers via the libvirt API and the virt-manager GUI. Linux Containers are a Technology Preview.

Prior to the Red Hat Enterprise Linux 6.5 release, the Red Hat Enterprise Linux High Availability Add-On was considered fully supported on certain VMware ESXi/vCenter versions in combination with the fence_scsi fence agent. Due to limitations of these VMware platforms in the area of SCSI-3 persistent reservations, the fence_scsi fencing agent is no longer supported on any version of the Red Hat Enterprise Linux High Availability Add-On in VMware virtual machines, except when using iSCSI-based storage. See the Virtualization Support Matrix for High Availability for full details on supported combinations.

Previously, when MAILTO recipients were set in the /etc/sysconfig/packagekit-background file, the /etc/cron.daily/packagekit-background.cron script only checked the return value of the pkcon command before trying to send email reports. As a consequence, two unnecessary empty emails were sent under certain circumstances. With this update, the $PKTMP file is no longer sent by email if it is empty, so only emails with useful information are sent in the described scenario.

Some servers use network cards that take a very long time to initialize after the link is reported as available. Consequently, the download of the kickstart file failed. This update re-adds support for the 'nicdelay' installer boot option by using NetworkManager's ability to check the gateway with a ping before the device is reported as connected.

As a result, for servers with network cards that take a very long time to initialize, the 'nicdelay' boot option can be used to prevent the kickstart download from failing.

This issue has been fixed by adding the 'AUTOFS_' prefix to the affected environment variables so that they cannot be used to subvert the system. A configuration option ('force_standard_program_map_env') has been added to override this prefix and use the environment variables without it. In addition, warnings have been added to the manual page and to the installed configuration file.

Now, by default, the standard variables of the program map are provided only with the prefix added to their names.

The autofs program did not update the export list of the Sun-format maps of the network shares exported from an NFS server. This happened because a change in the Sun-format map parser stopped the hosts map from being updated on a map re-read operation. The bug has now been fixed by selectively preventing this type of update only for the Sun-format maps.

The updates of the export list on the Sun-format maps are now visible, and refreshing of the export list is no longer supported for the Sun-format hosts map.

Previously, the 'slip' option was not handled correctly in the Response Rate Limiting (RRL) code in BIND. The variable counting the number of queries was reset after every other query instead of after each query. As a consequence, when the 'slip' value of the RRL feature was set to one, every other query was dropped instead of every query being slipped.

To fix this bug, the RRL code has been amended to reset the variable correctly according to the configuration. Now, when the 'slip' value of the RRL feature is set to one, every query is slipped as expected.

Previously, when the resolver was under heavy load, some clients could receive a SERVFAIL response from the server, and numerous 'out of memory/success' messages appeared in BIND's log. Also, cached records with a low TTL (1) could expire prematurely. Internal hard-coded limits in the resolver have been increased, and the conditions for expiring cached records with a low TTL (1) have been made stricter.

This prevents the resolver from reaching the limits when under heavy load and stops the 'out of memory/success' log messages. Cached records with a low TTL (1) no longer expire prematurely.
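The 'slip' semantics behind the BIND RRL fix can be sketched as a counter that is reset after every slipped response. This is a hypothetical illustration, not BIND's implementation. `rrl_state` and `rrl_limit` are made-up names. The sketch assumes that a slipped response is a truncated reply the client can retry over TCP, and that a slip value of 0 means every rate-limited response is dropped.

```c
#include <assert.h>
#include <stddef.h>

enum rrl_action { RRL_DROP, RRL_SLIP };

/* Per-client state for responses that exceed the rate limit. */
struct rrl_state {
    unsigned slip;     /* configured 'slip' value */
    unsigned counter;  /* limited responses seen since the last slip */
};

/* Decides what to do with one rate-limited response: every 'slip'-th one
   is slipped (sent truncated), the rest are dropped. slip == 0 drops all. */
static enum rrl_action rrl_limit(struct rrl_state *s) {
    assert(s != NULL);
    if (s->slip == 0)
        return RRL_DROP;
    if (++s->counter >= s->slip) {
        s->counter = 0;              /* reset after every slip */
        return RRL_SLIP;
    }
    return RRL_DROP;
}
```

With slip set to 1, every limited response is slipped. With slip set to 2, drops and slips alternate. Resetting the counter on the wrong schedule yields the alternating drop pattern that the bug report describes for slip=1.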

The bind-dyndb-ldap library incorrectly compared the current time with the expiration time of the Kerberos ticket used for authentication to an LDAP server. As a consequence, the Kerberos ticket was not renewed under certain circumstances, which caused the connection to the LDAP server to fail. The connection failure often happened after a BIND service reload was triggered by the logrotate utility. A patch has been applied to fix this bug, and Kerberos tickets are now correctly renewed in this scenario.

Previously, when the chkconfig utility modified a file in the /etc/xinetd.d/ directory, it set the file permissions to '644' and the SELinux context to 'root:object_r:etc_t'.

Such permissions, however, do not adhere to the Defense Information Systems Agency's (DISA) Security Technical Implementation Guide (STIG), which requires files in /etc/xinetd.d/ to be unreadable by other users. With this update, chkconfig ensures that the xinetd files it modifies have '600' permissions and that the correct SELinux context is preserved.

Previously, attempts to mount a CIFS share failed when the system keytab was stored in a non-default location specified using the default_keytab_name setting in the /etc/krb5.conf file, even when the user provided the correct Kerberos credentials. However, mounting succeeded when default_keytab_name pointed to the default /etc/krb5.keytab file. The cifs.upcall helper process has been modified to respect non-default keytab locations provided using default_keytab_name. As a result, CIFS mounts now work as expected even when the keytab is stored in a non-default location.

Previously, the gfs2_convert utility or certain types of corruption could introduce bogus values into the on-disk inode 'di_goal_meta' field.

Consequently, these bogus values could affect GFS2 block allocation, cause an EBADSLT error on such inodes, and prevent the creation of new files in directories or new blocks in regular files. With this update, gfs2_convert calculates the correct values. The fsck.gfs2 utility can now also identify and fix incorrect inode goal values, and the described problems no longer occur.

The gfs2_quota, gfs2_tool, gfs2_grow, and gfs2_jadd utilities did not mount the gfs2 meta file system with a 'context' mount option matching the 'context' option used for mounting the parent gfs2 file system. Consequently, the affected gfs2 utilities failed with the error message 'Device or resource busy' when run with SELinux enabled. These utilities have been updated to pass the 'context' mount option of the gfs2 file system to the meta file system, and they no longer fail when SELinux is enabled.

Previously, when using the UDP unicast (UDPU) protocol, all messages were sent to all configured members instead of only to the active members.

This is necessary for merge-detection messages. For other messages, however, it creates unnecessary traffic to missing members and can trigger excessive Address Resolution Protocol (ARP) requests on the network. The corosync code has been modified to send messages to missing members only when required, and otherwise only to the active ring members. Thus, most UDPU messages are now sent only to active members, except for the messages required for proper detection of a merge or a new member (1-2 packets/sec).

A previous update brought in a kernel change introducing the intel_pstate driver, which is incompatible with how scaling was managed up to Red Hat Enterprise Linux 6.7. Consequently, the cpuspeed service printed needless error messages during system boot and shutdown. With this update, platforms using intel_pstate support only the 'performance' and 'powersave' scaling governors, with 'powersave' as the default option and default state.

If the user has set a different governor, for example 'ondemand' or 'conservative', they have to edit the configuration and choose one of the two, 'powersave' or 'performance'. In addition, the needless error messages are no longer returned.

A prior update of the AMD64 and Intel 64 kernels removed the STACKFAULT exception stack. As a consequence, using the 'bt' command with the updated kernels displayed an incorrect exception stack name if the backtrace originated in an exception stack other than STACKFAULT.

In addition, the 'mach' command displayed incorrect names for exception stacks other than STACKFAULT. This update ensures that stack names are generated properly in the described circumstances, and both 'bt' and 'mach' now display correct information.

Previously, attempting to run the crash utility with the vmcore and vmlinux files caused crash to enter an infinite loop and become unresponsive. With this update, the handling of errors when gathering tasks from pidhash chains during session initialization has been enhanced. Now, if a pidhash chain has been corrupted, the initialization sequence no longer enters an infinite loop.

This prevents the described failure of the crash utility. In addition, the error messages associated with corrupt or invalid pidhash chains have been updated to report the pidhash index number.

Previously, any target port with the ALUA preference bit set was given a higher priority than all other target ports. Consequently, when a target port had the preference bit set, multipath did not balance the load between it and other equally optimized ports. With this update, the preference bit only increases the priority of paths that are not already optimized.

Now, if the preference bit is set on a non-optimized port, that port is used. However, if the preference bit is set on an optimized port, all optimized ports are used, and multipath balances the load across them.
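The ALUA priority rule can be sketched as follows. The numeric weights (50, 10, 1, and a bonus of 80 for the preference bit) are illustrative assumptions, not values quoted from multipath-tools. The sketch also assumes that paths are grouped by equal priority, so ports with the same priority share the load.

```c
#include <assert.h>

/* Simplified ALUA access states. */
enum alua_state { AAS_OPTIMIZED, AAS_NON_OPTIMIZED, AAS_STANDBY, AAS_UNAVAILABLE };

#define PREF_BONUS 80   /* illustrative bonus for the preference bit */

/* Illustrative base priority per access state (higher is preferred). */
static int base_priority(enum alua_state s) {
    switch (s) {
    case AAS_OPTIMIZED:     return 50;
    case AAS_NON_OPTIMIZED: return 10;
    case AAS_STANDBY:       return 1;
    default:                return 0;
    }
}

/* Old behaviour: the preference bit lifted a port above every other port,
   even above other optimized ports. */
static int prio_old(enum alua_state s, int pref) {
    return base_priority(s) + (pref ? PREF_BONUS : 0);
}

/* Fixed behaviour: the preference bit only boosts ports that are not
   already optimized, so optimized ports keep equal priority. */
static int prio_fixed(enum alua_state s, int pref) {
    return base_priority(s) + ((pref && s != AAS_OPTIMIZED) ? PREF_BONUS : 0);
}
```

With the fixed rule, two optimized ports (one with the preference bit set) end up with the same priority and share the load. A preferred but non-optimized port still outranks everything else.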

Previously, the libmultipath library kept a global cache of sysfs data for all programs, even though this was only necessary for the multipathd daemon. As a consequence, a memory error could occur when multiple threads were using libmultipath without locking. This led to unexpected termination of multithreaded programs using the mpath_persistent_reserve_in or mpath_persistent_reserve_out functions. With this update, only multipathd uses the global sysfs data cache, and the described crashes are thus avoided.

Previously, the first time the multipath utility recognized a path device, the device was not claimed in udev, and other programs could race multipath to claim it. As a consequence, multipath systems could fail to boot during installation. With this update, the multipathd daemon checks the kernel command line on startup. If it recognizes any parameters with a World Wide Identifier (WWID) value, it adds those WWIDs to the list of multipath WWIDs.
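Collecting the `mpath.wwid=` values from a kernel command line (for example, the contents of /proc/cmdline) can be sketched as follows. `parse_mpath_wwids` is a hypothetical helper, not multipathd's code. It splits the command line on whitespace and does not handle quoted parameters.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define MAX_WWIDS 16
#define WWID_LEN  128

/* Hypothetical helper: stores the values of all "mpath.wwid=" parameters
   found in 'cmdline' into 'out' and returns how many were stored. */
static size_t parse_mpath_wwids(const char *cmdline,
                                char out[][WWID_LEN], size_t max) {
    static const char key[] = "mpath.wwid=";
    const size_t key_len = sizeof key - 1;
    size_t count = 0;
    const char *p = cmdline;

    while (*p != '\0' && count < max) {
        while (*p == ' ' || *p == '\t' || *p == '\n')    /* skip separators */
            p++;
        const char *start = p;
        while (*p != '\0' && *p != ' ' && *p != '\t' && *p != '\n')
            p++;
        size_t tok_len = (size_t)(p - start);
        /* Only tokens with a non-empty value after the key are accepted. */
        if (tok_len > key_len && strncmp(start, key, key_len) == 0) {
            size_t val_len = tok_len - key_len;
            if (val_len >= WWID_LEN)
                val_len = WWID_LEN - 1;                   /* truncate defensively */
            memcpy(out[count], start + key_len, val_len);
            out[count][val_len] = '\0';
            count++;
        }
    }
    return count;
}
```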

Devices with those WWIDs are thus claimed the first time they are recognized. As a result, if multipath systems do not boot successfully during installation, users can add mpath.wwid=WWID to the kernel command line to work around the problem.

When network parameters were specified on the kernel command line, dracut only attempted to connect to iSCSI targets if the network could be brought up. Consequently, for misconfigured networks, iSCSI firmware settings or iSCSI offload connections were not explored. To fix this bug, dracut now attempts to connect to the iSCSI targets even if no network connection can be brought up after a certain timeout. As a result, iSCSI targets can be connected even with misconfigured kernel command-line network parameters.

Previously, if the superblock of an ext2, ext3, or ext4 file system contained a 'last mount' or 'last check' time set in the future, the e2fsck utility did not fix the error in 'preen' mode.

As a consequence, an incorrect system clock could stop the boot process and require administrator intervention due to a failed boot-time file system check. With this update, these time-stamp errors are fixed automatically in 'preen' mode, and the boot process is no longer interrupted in the described situation.

Previously, the libsysfs packages were not listed as a dependency of the edac-utils packages. As a consequence, on systems where libsysfs was not installed independently, edac-utils was not fully functional because the libraries provided by libsysfs were missing. This update adds libsysfs to the dependencies of edac-utils. As a result, libsysfs is installed automatically together with edac-utils, providing all the libsysfs libraries edac-utils needs to work properly on all systems.

GDB uses the '(anonymous namespace)' string in the string representation of any symbol defined in an anonymous namespace. However, the linespec parser did not recognize that this string was a necessary component, so symbol lookups failed and breakpoints could not be set or reset on symbols defined in anonymous namespaces. To fix this bug, the anonymous namespace recognition has been abstracted to clarify the unique role of this representation requirement.

Additionally, the linespec parser has been updated to handle the required string properly. As a result, GDB can properly set or reset breakpoints on symbols in anonymous namespaces.

Under certain conditions, while attaching to a process, GDB could perform the initial low-level ptrace attach request, but the kernel then refused to let the debugger finish the attach sequence. Consequently, GDB terminated unexpectedly with an internal error. Now, GDB handles this scenario gracefully and reports to the user that the attach request failed. The user receives a warning that GDB was unable to attach because permission was denied. In addition, the debugging session is not affected by this behavior.

When a breakpoint was pending and a newly appearing object file contained multiple possible locations for it, GDB checked this condition too strictly and issued an internal error. The check for multiple locations for the same breakpoint has been relaxed, and GDB no longer issues an internal error in this scenario. Instead, the user receives a warning that more than one location for the breakpoint has been found, but only one location will be used.

The grep packages have been upgraded to upstream version 2.20, which provides a number of bug fixes and enhancements over the previous version. Notably, the speed of various operations has been improved significantly.

Recursive grep now uses the fts function of the gnulib library for directory traversal. As a result, it can handle much larger directories without reporting the 'File name too long' error message, and it operates faster on large directory hierarchies. (BZ#982215, BZ#1064668, BZ#1126757, BZ#1167766, BZ#1171806)

Previously, the GNOME Desktop Virtual File System (GVFS) trash implementation did not take access permissions into consideration when creating file monitors for mount points. Consequently, file monitors polled files without read access permissions, which prevented AutoFS mount points from expiring as they normally would after a period of inactivity. With this update, the trash implementation no longer creates file monitors for files without read access permissions. As a result, AutoFS mount points can now expire freely.

The gvfsd-metadata daemon did not correctly handle the situation when an application tried to save a metadata entry larger than the size of a journal file, that is, larger than 32 kB. The daemon wrote all changes from the journal to the metadata database to make more space for the entry and then created a new journal file.

This operation was repeated unnecessarily in an infinite loop, overloading the CPU and disk. With this update, the operation is retried only once. As a result, a metadata entry that is too large is not saved, and gvfsd-metadata returns a warning instead.

Previously, no iptables revision was used for rules that match an ipset.

As a consequence, iptables rules with the match-set option could be added but not removed, because the rules could not be located again for removal. This update adds revision 0 and 1 code patches for libiptSET. As a result, new ipset match rules can now be removed. Note that adding and removing rules using the match-set option works with the patch applied, but removing a rule that was added with an earlier version of iptables does not work and cannot be fixed. Use the rule number to remove such rules.

The pkg-config (.pc) files for JSON-C were incorrectly placed in the /lib64/pkgconfig/ directory in the 64-bit packages and in the /lib/pkgconfig/ directory in the 32-bit packages.

Consequently, the pkg-config tool was unable to find these files and failed to provide the location of the installed JSON-C libraries, header files, and other information about JSON-C. With this update, the pkg-config files have been moved to the /usr/lib64/pkgconfig/ and /usr/lib/pkgconfig/ directories, respectively. As a result, the pkg-config tool now successfully returns information about the installed JSON-C packages.

It was found that the Linux kernel KVM subsystem's sysenter instruction emulation was insufficient. An unprivileged guest user could use this flaw to escalate their privileges by tricking the hypervisor into emulating a SYSENTER instruction in 16-bit mode, if the guest OS did not initialize the SYSENTER model-specific registers (MSRs). Note: certified guest operating systems for Red Hat Enterprise Linux with KVM do initialize the SYSENTER MSRs and are thus not vulnerable to this issue when running on a KVM hypervisor.

After the user attempted to change into a directory that lacked execute permission, ksh did not recognize that the change had failed and that the user was still operating in the original directory. Also, the 'pwd' utility incorrectly displayed the directory the user had attempted to change into instead of the directory in which the user was actually operating.
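Avoiding this amounts to trusting the return value of chdir() and updating the cached working directory only on success. The following is a minimal POSIX sketch in which `shell_cd` is a hypothetical helper, not ksh's code.

```c
#define _POSIX_C_SOURCE 200809L
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical helper: changes directory and refreshes the shell's cached
   working directory only if chdir() actually succeeded. Returns 0 on
   success or the errno value on failure (for example EACCES when execute
   permission on the target directory is missing). */
static int shell_cd(const char *path, char *pwd, size_t pwd_size) {
    assert(pwd != NULL && pwd_size > 0);
    if (chdir(path) != 0)
        return errno;            /* failed: cached pwd is left untouched */
    if (getcwd(pwd, pwd_size) == NULL)
        return errno;            /* changed, but the new path could not be read back */
    return 0;
}
```

A failed change leaves `pwd` as it was and returns the error code, which the shell can then report to the user.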

This update modifies ksh to verify whether the directory change was successful. As a result, ksh reports an error if the necessary execute permissions are missing.

Users of the lasso packages could previously experience several problems related to Red Hat Enterprise Linux interoperability with Microsoft Active Directory Federation Services (ADFS).

Authentication against ADFS failed when using the mod_auth_mellon module. In addition, in Apache sessions, the limit on the number of elements was insufficient, and multi-value variables were not supported. Also, the MellonCond parameter did not work when used together with the MellonSetEnv(NoPrefix) parameter. This update fixes the ADFS interoperability problems described above.

During a migration, the interface configuration of any libvirt domain of type='network' that referenced an 'unmanaged' libvirt network was transmitted with incorrect XML data. The data contained the 'status' of the interface instead of the name of the network to use (the 'configuration').

As a consequence, the migration destination tried to set up the domain's network interface using the status information from the migration source, and the migration failed. With this update, libvirt sends the configuration data for each device during migration rather than the status data, and migrating a domain that uses interfaces of type='network' now succeeds.

Prior to this update, saving a domain with the 'virsh save' command to an NFS share with 'root squash' access rights reduction failed with a 'Transport endpoint is not connected' error message when the libvirtd service ran with a non-default owner:group configuration. This update ensures that the chmod operation during the save process correctly uses the non-default owner:group configuration, and 'virsh save' works as expected in the described scenario.

Previously, luci did not allow VM resources to have child resources. After a VM was added to a service group, the 'add resource' button was removed so that no further resources could be added. However, the GUI could handle configurations that contained resources with children. As a consequence, even though luci supported such configurations, the 'add resource' button was removed after adding a VM resource.

With this update, the 'add resource' button is no longer removed when adding a VM resource to a service group.

SAP RFC SDK Download

Using the RFC SDK

The .NET edition of the SAP OData Connector uses either the Classic, Classic Unicode, NetWeaver, or SOAP RFC APIs to connect to an SAP system. The Java edition supports either the SAP JCo (Java Connector) or the SOAP RFC API. The ConnectionType property specifies the RFC API you want to use to connect. If you are using Classic, Classic_Unicode, or NetWeaver, you must also set Host, User, Password, Client, and SystemNumber.
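The property rules above, together with the SOAP case described further below (which uses `RFCUrl` instead of `Host`), can be expressed as a small validation step before connecting. This is a hypothetical sketch, not part of the connector's API. `struct prop` and `first_missing_prop` are illustrative names. The sketch assumes `Classic` when ConnectionType is unset, matching the default described below. `SOAP` as a ConnectionType value is also an assumption, since the text does not spell it out.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical name/value pair for a connection property. */
struct prop {
    const char *name;
    const char *value;
};

#define COUNT_OF(a) (sizeof(a) / sizeof((a)[0]))

/* Returns the value of a non-empty property, or NULL if it is unset. */
static const char *find_prop(const struct prop *props, size_t n, const char *name) {
    for (size_t i = 0; i < n; i++)
        if (strcmp(props[i].name, name) == 0 && props[i].value != NULL &&
            props[i].value[0] != '\0')
            return props[i].value;
    return NULL;
}

/* Returns the name of the first required property that is missing for the
   chosen ConnectionType, "ConnectionType" itself for an unknown value, or
   NULL if the set is complete. */
static const char *first_missing_prop(const struct prop *props, size_t n) {
    static const char *const rfc_required[] =
        { "Host", "User", "Password", "Client", "SystemNumber" };
    static const char *const soap_required[] =
        { "RFCUrl", "User", "Password", "Client", "SystemNumber" };

    const char *type = find_prop(props, n, "ConnectionType");
    if (type == NULL)
        type = "Classic";                     /* assumed default */

    const char *const *required;
    size_t count;
    if (strcmp(type, "Classic") == 0 || strcmp(type, "Classic_Unicode") == 0 ||
        strcmp(type, "NetWeaver") == 0) {
        required = rfc_required;
        count = COUNT_OF(rfc_required);
    } else if (strcmp(type, "SOAP") == 0) {   /* assumed value name */
        required = soap_required;
        count = COUNT_OF(soap_required);
    } else {
        return "ConnectionType";
    }

    for (size_t i = 0; i < count; i++)
        if (find_prop(props, n, required[i]) == NULL)
            return required[i];
    return NULL;
}
```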



Cloud Workgroup users that need access to this driver can download it from the Spotfire Cloud. Requirement: SAP NetWeaver RFC SDK 7.50, patch level 1 or later (supported in Spotfire 7.11 LTS hotfix …).

Additionally, you will need to obtain the libraries for the SAP SDK. Note: we do not distribute librfc32.dll or other SAP assemblies. You must obtain them from your SAP installation and install them on your machine. The following sections give more information on the dependencies for connecting to the RFC APIs.

Using the Classic RFC SDK

By default, the Classic RFC SDK provided with the non-Unicode library librfc32.dll is used. To use it, simply place the assembly in a location where it will be accessible at run time, such as the system32 or bin folder.

Using the Classic RFC SDK with Unicode Support

To use the Classic RFC SDK provided with the Unicode-enabled library librfc32u.dll, set ConnectionType to Classic_Unicode. The following libraries from the RFC SDK must also be available at run time, in addition to librfc32u.dll:
• icudt30.dll
• icuin30.dll
• icuuc30.dll

Using the NetWeaver RFC SDK

The NetWeaver RFC SDK library sapnwrfc.dll can be used instead by setting ConnectionType to NetWeaver. The following libraries from the RFC SDK must be available at run time in addition to sapnwrfc.dll:
• icudt30.dll
• icuin30.dll
• icuuc30.dll
• libicudecnumber.dll
• libsapucum.dll

Using the SOAP Service

If none of the RFC SDK libraries is available, you can connect to the SOAP API after first enabling Web services on your system.


To connect, set RFCUrl to the SOAP service in addition to the ConnectionType, Client, SystemNumber, User, and Password properties.

Using the SAP JCo

The SAP JCo (Java Connector) JAR file can be used to access the RFC SDK that communicates with SAP. You will need to include sapjco3.jar in your build path and make sure the native library location for the JAR is set to the folder containing the sapjco3 native library. On Windows machines this can simply be your system32 or syswow64 folder. On Linux, the native library is the libsapjco3.so file. On Mac OS X, it is the libsapjco3.jnilib file.
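The runtime libraries listed for each ConnectionType in the Classic, Classic Unicode, and NetWeaver sections can be checked before a connection is attempted. This is a minimal sketch with hypothetical helper names (`libs_for`, `first_missing_lib`). `SOAP` as a ConnectionType value is an assumption. The check simply tries to open each file in a given directory and does not replicate the Windows DLL search order.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

#define COUNT_OF(a) (sizeof(a) / sizeof((a)[0]))

/* Runtime libraries per ConnectionType, as listed in the text. */
static const char *const classic_libs[] = { "librfc32.dll" };
static const char *const classic_unicode_libs[] = {
    "librfc32u.dll", "icudt30.dll", "icuin30.dll", "icuuc30.dll" };
static const char *const netweaver_libs[] = {
    "sapnwrfc.dll", "icudt30.dll", "icuin30.dll", "icuuc30.dll",
    "libicudecnumber.dll", "libsapucum.dll" };

/* Returns the library list for a ConnectionType and stores its length in
   *count; returns NULL with *count = 0 for any other value, including the
   SOAP service, which needs no RFC SDK library. */
static const char *const *libs_for(const char *type, size_t *count) {
    if (strcmp(type, "Classic") == 0) {
        *count = COUNT_OF(classic_libs);
        return classic_libs;
    }
    if (strcmp(type, "Classic_Unicode") == 0) {
        *count = COUNT_OF(classic_unicode_libs);
        return classic_unicode_libs;
    }
    if (strcmp(type, "NetWeaver") == 0) {
        *count = COUNT_OF(netweaver_libs);
        return netweaver_libs;
    }
    *count = 0;
    return NULL;
}

/* Returns the first required library that cannot be opened in 'dir',
   or NULL if all of them are present. */
static const char *first_missing_lib(const char *dir, const char *type) {
    size_t n;
    const char *const *libs = libs_for(type, &n);
    char path[1024];
    for (size_t i = 0; i < n; i++) {
        snprintf(path, sizeof path, "%s/%s", dir, libs[i]);
        FILE *f = fopen(path, "rb");
        if (f == NULL)
            return libs[i];
        fclose(f);
    }
    return NULL;
}
```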